Why Computer Vision is a Big Deal in Medical Imaging

Since ChatGPT dropped in November 2022, it feels like the entire AI world has been obsessed with large language models. It's turned into a full-blown arms race, with big companies throwing enormous amounts of compute and money at transformers. To be clear, LLMs are cool and all, but there's this whole other side of AI that's not getting nearly enough attention, especially where it matters most: in areas that directly affect human lives.

Medicine is one of those areas. And honestly, it's kinda crazy how little people talk about it compared to chatbots. While transformer models dominate headlines and research budgets, a quiet revolution has been happening in medical imaging, one that's actually saving lives right now. Many scientific fields, especially where they intersect with machine learning, have huge demand for practical AI deployment, but somehow they're just not part of the conversation.

The Real Problems in Medical Imaging

Think about what radiologists do every day. They're sitting there looking at thousands of X-rays, CT scans, MRIs, trying to catch abnormalities that could be life-or-death situations. And they're dealing with some serious challenges that AI could actually help with.

Human error is inevitable. When you're reading your 200th scan of the day, fatigue kicks in. Your attention wavers. Things get missed. It's not that radiologists aren't skilled; it's that they're human, and humans have limits.

The process is also just slow. Analyzing complex medical images takes time, real time, and that creates bottlenecks throughout the entire healthcare system. Patients wait longer for diagnoses. Treatment gets delayed. In emergency situations, those delays can be critical.

Then there's the shortage problem. We don't have enough radiologists, especially in developing countries and rural areas. There just aren't enough specialists to go around, which means some patients don't get the level of care they need. And even when you have multiple radiologists looking at cases, consistency can be an issue. Different doctors might interpret the same scan differently, which creates uncertainty.

This is where computer vision becomes a game-changer. Not to replace doctors (that's not the goal), but to augment them. Give them better tools. Help them work faster and more accurately. Catch things they might miss.

Building SK.AI: The Technical Challenge

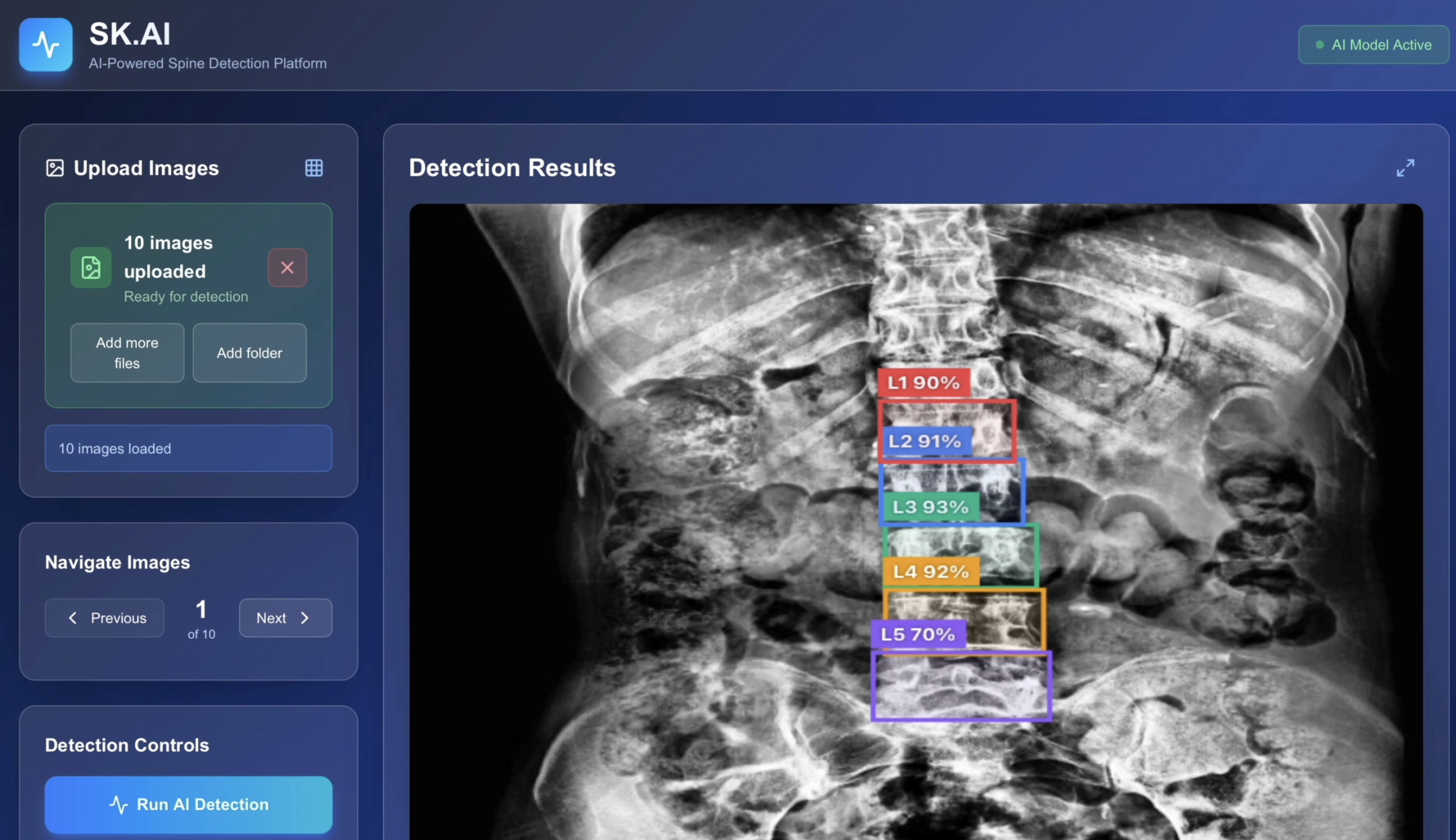

During my internship as a Computer Vision Engineer at Sky Long AI, I got to work on something that's pretty cool. SK.AI is an AI platform that automatically detects lumbar spine vertebrae in X-ray images, and building it taught me a ton about what it actually takes to deploy medical AI in the real world.

The core challenge is identifying five vertebrae, L1 through L5, with very high precision. That might sound straightforward, but a lot makes it tricky. Image quality is all over the place: X-rays vary wildly in contrast and noise depending on the machine, how the patient was positioned, and the technician's exposure settings. You're not working with clean, standardized data.
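One common way to reduce that contrast variation is percentile-based intensity windowing. You clip the darkest and brightest outliers, then stretch the remaining range to a fixed 0 to 255 scale so every image reaches the model looking roughly alike. The post doesn't say whether SK.AI does this, so treat the following as a hypothetical preprocessing step. It assumes a grayscale image stored as a flat list of pixel values. `percentile_window` and its 1st/99th percentile defaults are illustrative choices.

```python
def percentile_window(pixels, low_pct=1.0, high_pct=99.0):
    """Clip a grayscale image to its [low_pct, high_pct] percentile range and
    rescale it to 0..255. `pixels` is a flat list of numbers (assumed format)."""
    if not pixels:
        raise ValueError("empty image")
    ordered = sorted(pixels)
    last = len(ordered) - 1

    def percentile(p):
        # Simple percentile estimate: index into the sorted values.
        return ordered[round(p / 100.0 * last)]

    lo, hi = percentile(low_pct), percentile(high_pct)
    if hi == lo:
        # Constant image: nothing to stretch, so return mid-gray.
        return [128] * len(pixels)
    scale = 255.0 / (hi - lo)
    # Clip each pixel into [lo, hi], then map that range linearly onto 0..255.
    return [round((min(max(v, lo), hi) - lo) * scale) for v in pixels]


# A dim, low-contrast patch with one bright outlier pixel.
patch = [100, 105, 110, 115, 120, 125, 130, 135, 140, 250]
print(percentile_window(patch, low_pct=0, high_pct=90))
# [0, 32, 64, 96, 128, 159, 191, 223, 255, 255]
```

Clipping at a high percentile stops a single bright artifact from squashing the rest of the image into the dark end of the range.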

Speed matters too. In a clinical setting, you can't make doctors wait around for minutes while your model processes an image. You need results fast, ideally in under a second, so the tool fits seamlessly into their workflow. And privacy is critical. Patient data needs to stay secure. HIPAA compliance isn't optional; it's the law, and violations can bring serious legal and financial penalties.
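A target like "under a second" is easiest to check with a small timing harness. It should discard warm-up runs, because the first calls often pay one-time setup costs, and it should report a median and a 95th percentile instead of a single number. In the sketch below, `infer` is a hypothetical stand-in for whatever function runs the model. It isn't SK.AI's code.

```python
import statistics
import time


def measure_latency_ms(infer, sample, warmup=3, runs=20):
    """Call infer(sample) repeatedly and return (median_ms, p95_ms)."""
    for _ in range(warmup):
        infer(sample)  # warm-up calls are not timed
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    median = statistics.median(timings)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(timings, n=20)[-1]
    return median, p95


# Stand-in "model": the harness works with any callable.
median_ms, p95_ms = measure_latency_ms(lambda xs: sorted(xs), list(range(10_000)))
print(f"median {median_ms:.2f} ms, p95 {p95_ms:.2f} ms")
```

The tail number matters in a clinic. A tool that is usually fast but occasionally stalls still interrupts the workflow.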

But the biggest constraint is accuracy. In medicine, "pretty good" isn't good enough. You need near-perfect precision because the cost of false negatives or false positives is real. Miss a vertebra, and you might miss a fracture. Flag too many false positives, and doctors stop trusting your system.
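These two failure modes correspond to the two standard detection metrics. Recall is the fraction of real vertebrae the model finds, and it drops with every false negative. Precision is the fraction of predicted boxes that are real vertebrae, and it drops with every false positive. Here is a minimal sketch using made-up counts:

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN).
    Returns 0.0 for a metric whose denominator is zero."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical validation counts: 500 real vertebrae, 490 found, 20 spurious boxes.
p, r = precision_recall(tp=490, fp=20, fn=10)
print(f"precision={p:.3f} recall={r:.3f}")  # precision=0.961 recall=0.980
```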

How I Solved It: YOLOv11 + Client-Side Inference

I went with YOLOv11, the latest version of the YOLO object detection framework at the time, and fine-tuned it specifically for medical imaging. YOLO stands for "You Only Look Once," and the whole idea is that it detects objects very quickly by processing the entire image in a single pass through the network, rather than scanning sliding windows or evaluating region proposals the way older approaches did.
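A single-pass detector scores a large, fixed set of candidate boxes, so its raw output is full of overlapping near-duplicates. YOLO-style pipelines usually clean this up with a confidence cutoff followed by non-maximum suppression (NMS). The sketch below implements class-aware greedy NMS in plain Python. It assumes the raw output has already been decoded into `(x1, y1, x2, y2, score, class_id)` tuples. The post doesn't cover the exact output tensor layout of an exported YOLOv11 model or how SK.AI post-processes it, and the 0.25/0.45 thresholds are only illustrative.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(detections, conf_thresh=0.25, iou_thresh=0.45):
    """Class-aware greedy NMS over (x1, y1, x2, y2, score, class_id) tuples.

    Drops low-confidence boxes, then keeps boxes in descending score order,
    skipping any box that overlaps an already-kept box of the same class
    with IoU >= iou_thresh.
    """
    candidates = sorted(
        (d for d in detections if d[4] >= conf_thresh),
        key=lambda d: d[4],
        reverse=True,
    )
    kept = []
    for det in candidates:
        if all(k[5] != det[5] or iou(k[:4], det[:4]) < iou_thresh for k in kept):
            kept.append(det)
    return kept


raw = [
    (10, 10, 50, 40, 0.92, 0),      # class 0 (hypothetically L1)
    (12, 11, 51, 41, 0.80, 0),      # near-duplicate of the box above
    (10, 45, 50, 80, 0.88, 1),      # class 1 (hypothetically L2)
    (200, 200, 210, 210, 0.10, 2),  # low-confidence noise
]
for det in nms(raw):
    print(det)
# (10, 10, 50, 40, 0.92, 0)
# (10, 45, 50, 80, 0.88, 1)
```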

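I trained the model on a dataset of 15,553 spine X-rays with manual annotations, using YOLOv11n, the nano variant optimized for speed. The final model hits 97.9% mAP50 and 86.7% mAP50-95. mAP50 is the mean average precision when a predicted box counts as correct if its intersection over union (IoU) with the ground-truth box is at least 0.5. mAP50-95 averages the same metric over stricter IoU thresholds from 0.50 to 0.95, so it also rewards how tightly the boxes fit. Put simply, the model reliably finds the vertebrae, and its boxes are mostly tight. And it runs fast, around 150 milliseconds per image, which is well within the acceptable range for clinical use.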
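The two numbers differ because mAP50-95 re-scores every prediction at ten IoU thresholds (0.50, 0.55, ..., 0.95) and averages the resulting AP values. The sketch below shows only the threshold sweep for a single box. Computing AP itself also requires ranking all detections by confidence and integrating a precision-recall curve, and that part is left out. The 0.72 IoU is a made-up example.

```python
# The ten IoU thresholds averaged by mAP50-95: 0.50, 0.55, ..., 0.95.
THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def thresholds_passed(box_iou):
    """IoU thresholds at which a prediction with this IoU counts as a true positive."""
    return [t for t in THRESHOLDS if box_iou >= t]


# A box that lands on the right vertebra but is drawn a little loose.
passed = thresholds_passed(0.72)
print(f"{len(passed)} of {len(THRESHOLDS)} thresholds")  # 5 of 10 thresholds
print(passed)  # [0.5, 0.55, 0.6, 0.65, 0.7]
```

A box like this is a full hit for mAP50 but a miss at half of the stricter thresholds. That suggests one way to read the gap between 97.9% and 86.7%: detections are almost always on target, while box tightness leaves more room for improvement.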

But here's the part I'm most excited about: everything runs client-side using ONNX.js. That means the entire inference pipeline happens in your browser, and zero patient data gets sent to any server. Because the data never leaves the user's machine, the biggest HIPAA risk (transmitting and storing patient data) is avoided by design. You don't need expensive GPU servers or cloud infrastructure. It just works, entirely in the browser.
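Before an in-browser model can run, each X-ray has to be resized to the network's fixed input size. Afterward, the predicted boxes have to be mapped back to the original pixel coordinates. YOLO pipelines commonly handle this with letterboxing: scale the image to fit, then pad the rest. The in-browser pipeline described here runs on ONNX.js, so this Python sketch covers only the geometry. It assumes a square 640×640 input (the Ultralytics default `imgsz`; SK.AI's actual size isn't stated) with centered padding. The function names are hypothetical.

```python
def letterbox_params(orig_w, orig_h, target=640):
    """Scale factor and padding that fit an image into a target x target square
    without distorting its aspect ratio (padding split evenly on both sides)."""
    scale = min(target / orig_w, target / orig_h)
    new_w, new_h = round(orig_w * scale), round(orig_h * scale)
    pad_x = (target - new_w) / 2
    pad_y = (target - new_h) / 2
    return scale, pad_x, pad_y


def box_to_original(box, scale, pad_x, pad_y, orig_w, orig_h):
    """Undo letterboxing for an (x1, y1, x2, y2) box predicted in model space."""
    x1, y1, x2, y2 = box
    # Remove the padding, undo the scaling, then clamp to the original image.
    x1 = min(max((x1 - pad_x) / scale, 0), orig_w)
    x2 = min(max((x2 - pad_x) / scale, 0), orig_w)
    y1 = min(max((y1 - pad_y) / scale, 0), orig_h)
    y2 = min(max((y2 - pad_y) / scale, 0), orig_h)
    return x1, y1, x2, y2


# Example: a portrait 1000 x 2000 X-ray.
scale, pad_x, pad_y = letterbox_params(1000, 2000)
print(scale, pad_x, pad_y)  # 0.32 160.0 0.0
print(box_to_original((320, 64, 480, 128), scale, pad_x, pad_y, 1000, 2000))
```

Preserving the aspect ratio matters here because stretching a tall spine X-ray into a square would distort vertebra shapes that the model learned.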

You can actually try it yourself at https://skai-spine.vercel.app/. Upload an X-ray and watch it detect the vertebrae in real time.

Why This Actually Matters Beyond Just Detection

Okay, so we built a model that can find vertebrae. Cool. But why does it matter beyond just the detection itself?

First, it's not about replacing doctors. This is super important to understand. SK.AI isn't trying to replace radiologists; it's giving them a second pair of eyes. It helps them quickly verify vertebra positions, spend less time on routine work, and focus their expertise on complex diagnostic decisions. It also helps them stay consistent across thousands of scans, even when they're tired or overwhelmed.

Second, once you can accurately locate and crop individual vertebrae, you unlock all kinds of downstream possibilities. You can run targeted analysis on specific vertebrae to look for compression fractures, where early detection can prevent serious complications. You can track degenerative disc disease over time, measuring its progression with quantitative metrics rather than subjective assessment. You can measure scoliosis curvature precisely, or spot tumors and lesions in specific vertebrae that might be subtle in the full image.
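Cropping is mostly bookkeeping. You expand each detected box by a small margin so the crop includes the endplates and nearby disc spaces, clamp it to the image, and sort the crops top to bottom, which gives L1 to L5 order on an upright view. This minimal sketch assumes detections are `(x1, y1, x2, y2, score, label)` tuples in original-image pixels and that the image is a list of pixel rows. The 10% margin and the helper names are hypothetical.

```python
import math


def crop_box(box, img_w, img_h, margin=0.10):
    """Expand an (x1, y1, x2, y2) box by `margin` of its size on each side,
    clamp it to the image, and return integer pixel bounds."""
    x1, y1, x2, y2 = box
    dx, dy = (x2 - x1) * margin, (y2 - y1) * margin
    left = max(0, math.floor(x1 - dx))
    top = max(0, math.floor(y1 - dy))
    right = min(img_w, math.ceil(x2 + dx))
    bottom = min(img_h, math.ceil(y2 + dy))
    return left, top, right, bottom


def crop_vertebrae(image, detections, margin=0.10):
    """Return (label, crop) pairs ordered from the top of the image down."""
    img_h, img_w = len(image), len(image[0])
    crops = []
    for x1, y1, x2, y2, _score, label in sorted(detections, key=lambda d: d[1]):
        left, top, right, bottom = crop_box((x1, y1, x2, y2), img_w, img_h, margin)
        crops.append((label, [row[left:right] for row in image[top:bottom]]))
    return crops


# Synthetic 100 x 100 image and two detections listed out of order.
image = [[0] * 100 for _ in range(100)]
detections = [(10, 40, 30, 50, 0.90, "L2"), (10, 10, 30, 20, 0.95, "L1")]
for label, crop in crop_vertebrae(image, detections):
    print(label, len(crop[0]), "x", len(crop))  # L1 24 x 12, then L2 24 x 12
```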

Third, because everything runs client-side, you're making healthcare more accessible. Small clinics don't need expensive infrastructure. It works even in places with limited resources, like rural hospitals or developing countries. That brings down healthcare costs and means more people can access quality diagnostics.

And finally, the platform supports research and education. Medical students can use it to train with annotated examples. Researchers can use it to study spinal disorders. Developers can build on it to create new algorithms for related problems. It's contributing to the broader open-source medical AI ecosystem.

Computer Vision is Quietly Transforming Medicine

SK.AI is just one example. There's so much happening in medical computer vision that most people don't know about because everyone's focused on ChatGPT and LLMs.

In cancer detection, there are AI systems that find cancerous cells in tissue samples with expert-level accuracy, often faster than human pathologists. In some studies, AI-assisted breast cancer screening has produced fewer false alarms than traditional reading, which means less patient anxiety and fewer unnecessary biopsies. There's even melanoma detection from photos you can take on your phone, bringing dermatology screening to anyone with a smartphone.

Emergency medicine is being transformed too. Rapid stroke detection in brain scans matters because every second counts, and AI can flag potential strokes instantly. Automated detection of fractures and internal bleeding speeds up trauma assessment. COVID-19 and pneumonia detection from chest X-rays became critical during the pandemic and continues to be useful.

Eye diseases are another huge area. Automated diabetic retinopathy screening prevents blindness by catching the disease early. Early glaucoma detection looks for optic nerve damage before patients notice symptoms. Tracking macular degeneration progression helps doctors adjust treatment plans.

All of this is happening right now, in hospitals and clinics around the world, but it's not making headlines like the latest GPT model.

What I Learned Building This

Working on SK.AI taught me some hard lessons about what it takes to build medical AI that actually works in the real world, not just in research papers.

Data quality beats fancy models every single time. You can have the most sophisticated architecture in the world, but if your data sucks, your model will suck. We spent a lot of time on careful data collection and annotation, making sure we had diverse patient demographics represented, using data augmentation to handle weird edge cases, and validating on real clinical data from multiple hospitals.

Trade-offs are everywhere, and you have to navigate them carefully. Accuracy versus speed is a classic one. YOLOv11n hits a sweet spot where it's both fast and accurate enough for clinical use, but that required a lot of experimentation. Model size versus performance is another. Our 11MB ONNX model fits in browsers without sacrificing too much accuracy, but we could have gotten higher accuracy with a bigger model that wouldn't be practical to deploy. And interpretability versus complexity matters more in medicine than most other domains. Doctors need to trust the AI, so you can't just throw a black box at them and expect adoption.
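Here is a quick check on the model-size trade-off. An ONNX file is dominated by its weights, so its size is roughly parameter count × bytes per parameter. Ultralytics' published model table lists YOLO11n (the model the post calls YOLOv11n) at about 2.6 million parameters and YOLO11s at about 9.4 million. Stored as 32-bit floats, the nano model comes to about 10.4 MB, which is consistent with the 11MB file once graph structure and metadata are added. The FP16 and INT8 figures show what quantization would yield in principle. The post doesn't say SK.AI uses either.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def approx_size_mb(num_params, dtype="fp32"):
    """Rough weight size in megabytes (10**6 bytes), ignoring graph metadata."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


# Approximate parameter counts (assumption: from Ultralytics' published YOLO11 table).
models = {"yolo11n": 2.6e6, "yolo11s": 9.4e6}
for name, params in models.items():
    sizes = ", ".join(f"{dt}: {approx_size_mb(params, dt):.1f} MB" for dt in BYTES_PER_PARAM)
    print(f"{name}: {sizes}")
# yolo11n: fp32: 10.4 MB, fp16: 5.2 MB, int8: 2.6 MB
# yolo11s: fp32: 37.6 MB, fp16: 18.8 MB, int8: 9.4 MB
```

A model several times larger has to be downloaded by every browser that opens the page, which is a key reason the nano variant fits client-side deployment.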

Working with actual radiologists was eye-opening. I learned that confidence scores need to make intuitive sense to them, not just be mathematically correct. The UI has to fit into their existing workflow, or they won't use it. False positives and false negatives have different clinical costs depending on what you're detecting, so you need to tune your thresholds accordingly. And sometimes speed is just as important as accuracy, because if the tool slows them down, it doesn't matter how good it is.
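Tuning thresholds to clinical cost can be made explicit. You assign a cost to each missed finding and to each false alarm, then pick the confidence threshold that minimizes total cost on a validation set. The sketch below assumes each validation detection has already been matched to ground truth and labeled as a true or false positive. The costs, scores, and candidate thresholds are made-up illustrative numbers, not SK.AI's.

```python
def best_threshold(scored, num_ground_truth, cost_fn, cost_fp, candidates):
    """Return (threshold, cost) with the lowest weighted error cost.

    scored: list of (confidence, is_true_positive) for matched detections.
    num_ground_truth: total number of real objects in the validation set.
    cost_fn / cost_fp: relative cost of a miss vs. a false alarm.
    """
    best = None
    for t in candidates:
        kept = [is_tp for conf, is_tp in scored if conf >= t]
        tp = sum(kept)
        fp = len(kept) - tp
        fn = num_ground_truth - tp  # real objects left undetected at this threshold
        cost = cost_fn * fn + cost_fp * fp
        if best is None or cost < best[1]:
            best = (t, cost)
    return best


# Hypothetical matched validation detections: (confidence, is_true_positive).
scored = [(0.95, True), (0.90, True), (0.80, True), (0.70, False),
          (0.60, True), (0.40, False), (0.30, True), (0.20, False)]
candidates = [0.25, 0.50, 0.75]

# Misses are expensive: a low threshold wins.
print(best_threshold(scored, 6, cost_fn=10, cost_fp=1, candidates=candidates))  # (0.25, 12)
# False alarms are expensive: a high threshold wins.
print(best_threshold(scored, 6, cost_fn=1, cost_fp=5, candidates=candidates))  # (0.75, 3)
```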

Real-world deployment is way harder than getting good numbers on a test set. You're dealing with browser compatibility issues because not all browsers support WebAssembly equally. Different hospitals use different image formats and resolutions. You need to make sure it works on older hardware that clinics might be using. And you need comprehensive error handling because in medical settings, crashes are unacceptable.
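Because hospitals send different formats, a defensive first step is to identify each file from its leading bytes instead of trusting the extension. Anything unexpected should be rejected with a clear error rather than allowed to crash the pipeline. The signatures below are the standard ones: PNG's 8-byte header, JPEG's `FF D8 FF`, and DICOM's `DICM` marker after a 128-byte preamble. The function name, the error type, and the set of formats SK.AI accepts are assumptions.

```python
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
JPEG_SIGNATURE = b"\xff\xd8\xff"
DICOM_MAGIC = b"DICM"  # expected at byte offset 128, after the preamble


class UnsupportedImageError(ValueError):
    """Raised for inputs the pipeline should refuse rather than crash on."""


def sniff_format(data: bytes) -> str:
    """Identify an uploaded image from its magic bytes."""
    if data.startswith(PNG_SIGNATURE):
        return "png"
    if data.startswith(JPEG_SIGNATURE):
        return "jpeg"
    if len(data) >= 132 and data[128:132] == DICOM_MAGIC:
        # DICOM files need a dedicated decoder before inference.
        return "dicom"
    raise UnsupportedImageError("unrecognized image format")


for blob in (PNG_SIGNATURE + b"\x00" * 8, b"\x00" * 128 + DICOM_MAGIC, b"GIF89a"):
    try:
        print(sniff_format(blob))
    except UnsupportedImageError as err:
        print("rejected:", err)
```

Some older or non-conformant DICOM files omit the preamble and `DICM` marker, so a production pipeline would rely on a real DICOM parser rather than on this check alone.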

Where This is All Heading

The intersection of computer vision and medical imaging is moving fast, and there's some really exciting stuff on the horizon.

Multi-modal fusion is one direction that's gaining traction. Instead of looking at just one type of scan, you combine X-ray, MRI, and CT to get a more complete picture of what's going on. Each imaging modality captures different information, and AI can integrate them in ways that are hard for human readers to do mentally.

3D reconstruction is another big area. Right now, radiologists often work with 2D slices from CT or MRI scans and have to mentally construct the 3D structure. AI can build full 3D models from those 2D slices automatically, which is super useful for surgical planning. Surgeons can literally see the exact shape and position of structures before they make an incision.

Real-time surgical assistance is getting closer to reality. Imagine AR overlays during minimally invasive procedures that show the surgeon exactly where critical structures are, based on pre-op imaging. That's not science fiction; prototypes exist today.

Predictive analytics is maybe the most exciting direction. Instead of just detecting disease now, AI could use imaging to predict how diseases will progress, how patients will respond to different treatments, what their outcomes will be. That enables truly personalized medicine.

The Challenges We Still Face

It's not all smooth sailing though. There are real challenges that need to be solved before medical AI becomes ubiquitous.

Getting regulatory approval is a huge hurdle. FDA clearance and CE marking requirements are strict, and rightfully so, because this stuff affects patient safety. Clinical trials can take years and cost millions of dollars. Legal liability is a real concern too: who's responsible if an AI makes a mistake? The developer, the hospital, or the doctor who used it?

Integrating with existing hospital systems is harder than it sounds. Medical systems like PACS aren't always easy to integrate with, especially older installations. Changes to workflow can be disruptive, and hospitals are risk-averse environments. Training staff takes time and resources that many hospitals don't have.

Ethics and fairness are critical issues that can't be ignored. Algorithmic bias is a real problem: if your training data comes mostly from one demographic, your model might perform worse on others. Models need to be transparent and explainable, especially for high-stakes medical decisions. And patient consent and data privacy aren't just technical challenges; they're fundamental rights that need to be protected.
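A basic way to check for this kind of bias is to break evaluation metrics out by subgroup instead of reporting one aggregate number. The sketch below computes per-group recall from per-object results. The group labels and records are hypothetical. In practice you would use whatever metadata your data actually has, such as age band, sex, scanner vendor, or site.

```python
from collections import defaultdict


def recall_by_group(records):
    """records: iterable of (group, found) pairs, one per ground-truth object,
    where `found` is True if the model detected it. Returns {group: recall}."""
    found = defaultdict(int)
    total = defaultdict(int)
    for group, was_found in records:
        total[group] += 1
        found[group] += int(was_found)
    return {g: found[g] / total[g] for g in total}


def largest_gap(per_group):
    """Recall difference between the best- and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical evaluation records for two subgroups.
records = (
    [("group_A", True)] * 95 + [("group_A", False)] * 5
    + [("group_B", True)] * 80 + [("group_B", False)] * 20
)
per_group = recall_by_group(records)
print(per_group)  # {'group_A': 0.95, 'group_B': 0.8}
print(round(largest_gap(per_group), 2))  # 0.15
```

An aggregate recall of 0.875 here would hide the fact that one group's findings are missed four times as often as the other's.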

There are technical limitations too. Rare diseases don't have much training data, so models struggle with them. Models need to generalize across different hospitals and equipment, which is hard when there's so much variation in imaging protocols. And handling weird edge cases, the stuff that doesn't fit your training distribution, remains an open problem.

The Bottom Line

While everyone's going crazy over LLMs, computer vision is quietly revolutionizing healthcare. The impact is real and measurable. Faster diagnoses mean catching diseases earlier when they're more treatable. Better accuracy literally saves lives by reducing missed diagnoses and unnecessary procedures. Greater accessibility means more people get quality care regardless of where they live. Lower costs make healthcare affordable for more patients.

Building SK.AI showed me that you don't need billion-parameter models or massive data centers to make a real impact. Sometimes the most meaningful AI applications are the ones solving actual problems for actual people, one vertebra, one diagnosis, one life at a time.

Next time someone talks about AI breakthroughs, remember it's not just about what AI can say. It's about what it can see, understand, and help heal. That's the future I want to be part of. That's why I think computer vision in medicine is a way bigger deal than most people realize.

Links and Resources

If you want to try SK.AI yourself, check out https://skai-spine.vercel.app/. The code is open source at https://github.com/choshingcheung/sk.ai.

The main technologies I used were YOLOv11 from Ultralytics, ONNX Runtime from Microsoft, and medical imaging datasets from Roboflow. This post is based on my actual work as a computer vision engineering intern. SK.AI is for research and educational purposes only; it's not FDA-approved for clinical use.